Benson Duong

Data Science major at UC San Diego

Interests: Data Science, Python Programming, and Machine Learning.

Deep Learning Image Auto-Captioner with PyTorch

- Our team consisted of Takuro Kitasawa, Rye Gleason, Jeremy Nurding, and me.

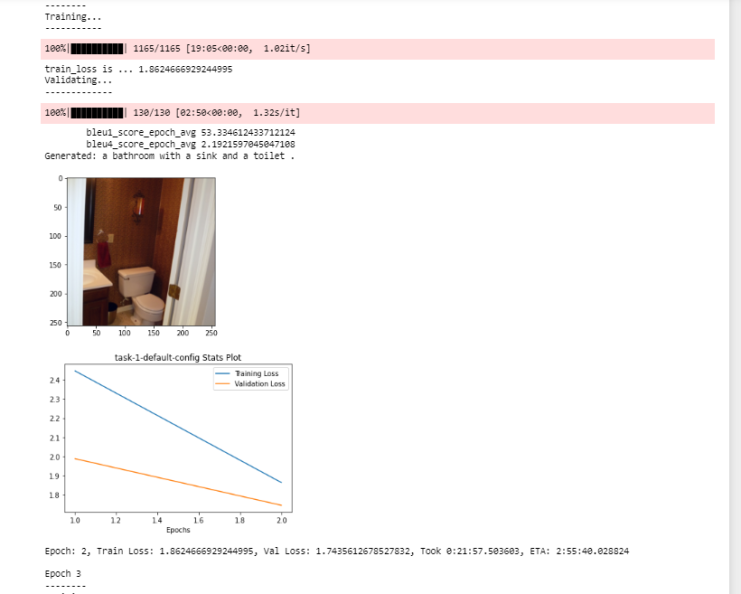

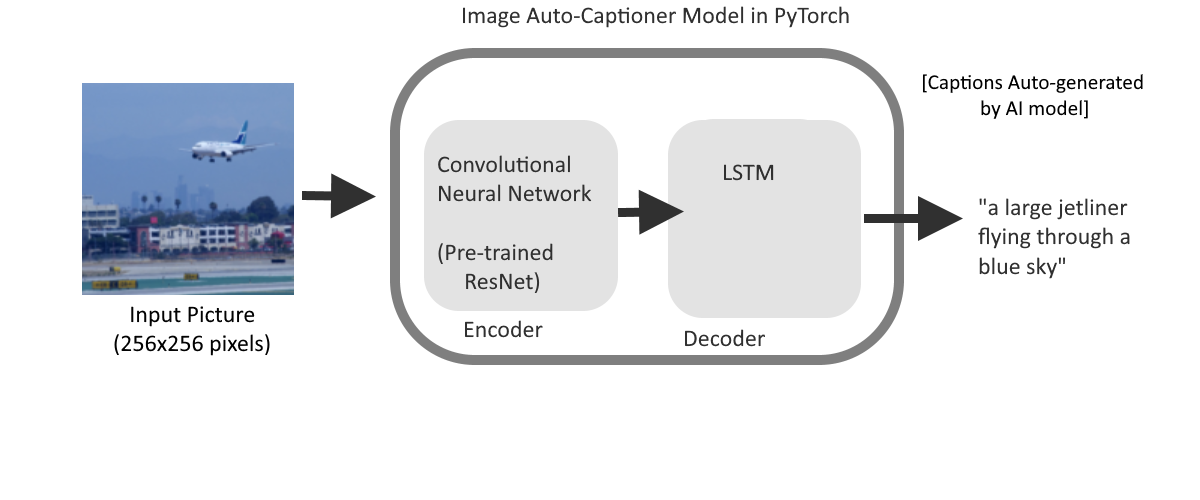

- In this project, we developed and trained a generative AI model that takes an image as input and automatically generates a grammatically correct English caption describing the image's content.

- While working out a suitable custom PyTorch architecture, we developed several versions of this model:

- The model architecture connects either a custom Convolutional Neural Network or a pre-trained ResNet to an LSTM Recurrent Neural Network.

- The custom Convolutional Neural Network was based on the AlexNet architecture (not shown in the flowchart above). I was the teammate responsible for implementing its architecture in PyTorch. Because it was not pre-trained, the model variant that used it trained much more slowly.

- Regardless of which encoder is used, its output passes through an additional Dense (fully connected) layer that projects the image features to a shape compatible with the LSTM's input.

- The Convolutional Neural Network (AlexNet or ResNet) serves as the encoder, and the LSTM serves as the decoder.

- The LSTM is trained with teacher forcing. Each generated caption is capped at 20 words.

- Using UCSD's Datahub server, we trained the model for up to 6 hours per run, repeating this for several different hyperparameter configurations.
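An AlexNet-style encoder of the kind described above can be sketched in PyTorch as follows. This is a minimal illustration, not the team's code. The class name `AlexNetEncoder`, the `embed_size` of 256, and the 224×224 input size are hypothetical. The convolutional channel sizes follow torchvision's `alexnet` variant. AlexNet's 4096-unit classifier layers are assumed to be replaced by a single dense layer that projects the features to the LSTM's input size.

```python
import torch
import torch.nn as nn


class AlexNetEncoder(nn.Module):
    """Hypothetical AlexNet-style CNN encoder, trained from scratch (no pre-trained weights)."""

    def __init__(self, embed_size: int):
        super().__init__()
        # Five convolutional layers and three max-pooling stages (torchvision's channel sizes).
        self.features = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=11, stride=4, padding=2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(64, 192, kernel_size=5, padding=2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(192, 384, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(384, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
        )
        self.pool = nn.AdaptiveAvgPool2d((6, 6))
        # Assumed dense projection: flattened 256x6x6 feature map -> LSTM input size.
        self.fc = nn.Linear(256 * 6 * 6, embed_size)

    def forward(self, images: torch.Tensor) -> torch.Tensor:
        x = self.pool(self.features(images))   # (batch, 256, 6, 6)
        return self.fc(torch.flatten(x, 1))    # (batch, embed_size)


torch.manual_seed(0)
encoder = AlexNetEncoder(embed_size=256)
images = torch.randn(2, 3, 224, 224)  # random stand-in for a batch of RGB images
features = encoder(images)
print(features.shape)  # torch.Size([2, 256])
```

Every filter here starts from random initialization and has to be learned from the captioning data. This helps explain why the AlexNet variant trained more slowly than the one built on a pre-trained ResNet. A ResNet encoder's pooled features would need an analogous dense projection.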
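The encoder-decoder design with teacher forcing can be illustrated with a small LSTM decoder sketch. Under teacher forcing, the decoder receives the ground-truth previous word at every step rather than its own prediction.

The sketch rests on these assumptions:
- The projected image feature is fed to the LSTM as its first input step.
- Captions are integer token ids that begin with a start token.
- The class name `CaptionDecoder`, the layer sizes, and the random tensors are placeholders.
- `features` stands in for the output of either encoder.

```python
import torch
import torch.nn as nn


class CaptionDecoder(nn.Module):
    """Hypothetical LSTM decoder; the image feature vector is the first time step."""

    def __init__(self, embed_size: int, hidden_size: int, vocab_size: int):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, embed_size)
        self.lstm = nn.LSTM(embed_size, hidden_size, batch_first=True)
        self.out = nn.Linear(hidden_size, vocab_size)

    def forward(self, features: torch.Tensor, captions: torch.Tensor) -> torch.Tensor:
        # Teacher forcing: inputs are the ground-truth tokens shifted right by one
        # (image feature first), so output step t is trained to predict captions[:, t].
        word_inputs = self.embed(captions[:, :-1])                        # (B, T-1, E)
        inputs = torch.cat([features.unsqueeze(1), word_inputs], dim=1)   # (B, T, E)
        hidden, _ = self.lstm(inputs)                                     # (B, T, H)
        return self.out(hidden)                                           # (B, T, V)


# Hypothetical sizes and random data, only to check shapes and run one backward pass.
torch.manual_seed(0)
embed_size, hidden_size, vocab_size = 256, 512, 1000
decoder = CaptionDecoder(embed_size, hidden_size, vocab_size)
features = torch.randn(4, embed_size)              # stands in for encoder output
captions = torch.randint(0, vocab_size, (4, 12))   # token ids for 4 captions of length 12
logits = decoder(features, captions)
loss = nn.CrossEntropyLoss()(logits.reshape(-1, vocab_size), captions.reshape(-1))
loss.backward()
print(logits.shape)  # torch.Size([4, 12, 1000])
```

In a real training loop, padded positions would usually be excluded from the loss, for example with `nn.CrossEntropyLoss(ignore_index=pad_id)`. Here `pad_id` is a hypothetical name for whatever padding token the vocabulary defines.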
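Teacher forcing applies only during training. When generating a caption there is no ground truth, so each predicted word is fed back in as the next input until an end token appears or the 20-word cap is reached.

The framework-free sketch below shows that loop under these simplifying assumptions:
- The hypothetical `step` function stands in for one LSTM step followed by picking the most likely word. Greedy decoding is an assumption; the write-up does not say which decoding strategy was used.
- `state` stands in for the LSTM hidden state.
- The two lookup tables are toy models, not trained ones.

```python
from typing import Any, Callable, List, Tuple

MAX_WORDS = 20  # each generated caption is capped at 20 words

# Hypothetical step signature: (previous word, recurrent state) -> (next word, new state).
# In the real model this would be one LSTM step followed by an argmax over the vocabulary.
Step = Callable[[str, Any], Tuple[str, Any]]


def greedy_decode(step: Step, state: Any = None, max_words: int = MAX_WORDS) -> List[str]:
    """Feed each predicted word back in as the next input until <end> or the word cap."""
    words: List[str] = []
    prev = "<start>"
    while len(words) < max_words:
        prev, state = step(prev, state)
        if prev == "<end>":
            break
        words.append(prev)
    return words


# Hypothetical toy "models": lookup tables instead of a trained LSTM.
bigrams = {"<start>": "a", "a": "dog", "dog": "runs", "runs": "<end>"}
stuck = {"<start>": "very", "very": "very"}  # never emits <end>

print(greedy_decode(lambda w, s: (bigrams.get(w, "<end>"), s)))       # ['a', 'dog', 'runs']
print(len(greedy_decode(lambda w, s: (stuck.get(w, "<end>"), s))))    # 20
```

The second toy model never predicts an end token. It shows why a hard length cap is useful: without it, a decoder stuck repeating a word would never terminate.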